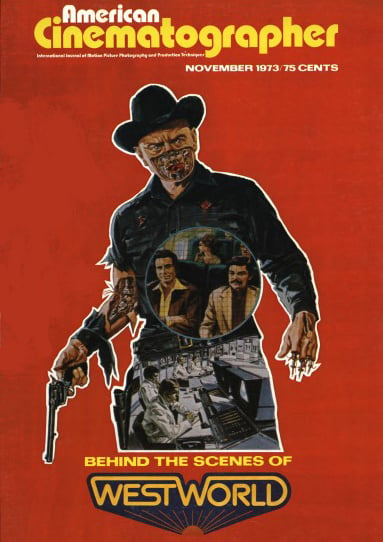

Creating the Special Effects for Westworld

The inventive approach to rendering a killer robot’s electronic POV for this imaginative sci-fi tale.

By John Whitney, Jr.

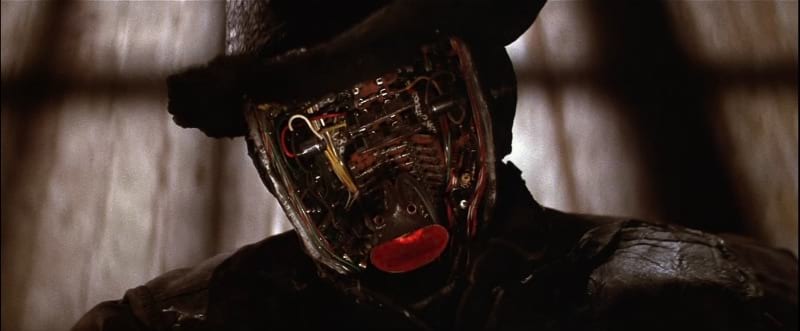

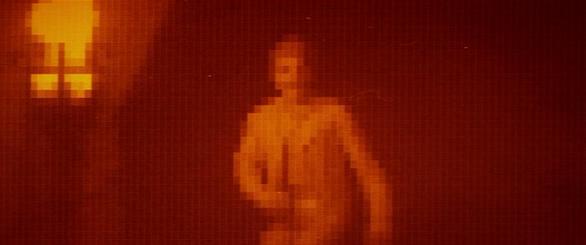

When I met Brent Sellstrom, the post-production supervisor of Westworld, he had a problem: to find a technique to represent, on film, the point of view of a machine. The script called for the audience to see the world as a robot gunfighter, played by Yul Brynner, saw it. This "Robot POV" was supposed to consist of a series of animated colored rectangles. It could not be done by any known special-effects technique. Something new was required.

It occurred to me that the scanning digitizing methods employed by NASA’s Jet Propulsion Laboratory on their Mariner Mars flybys could be used here. Basically, in this system, an image is broken down into a series of points, and the gray-scale value of each point is determined. A numerical value can then be assigned to each point, and a new image reconstituted electronically. Similar techniques have been devised in computer science to enable computers to "read" handwriting, X-rays, seismic data, and so on. It is the kind of technology that allows a computer to tell the difference between a "P" and an "R".
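The "break the image into points and assign each a number" idea can be sketched in a few lines. This is a minimal illustration, not the JPL or Information International software: the scene is modeled as a hypothetical `brightness(x, y)` function returning 0.0 to 1.0, and the choice of 256 gray levels is an assumption, since the article does not give the bit depth of the scanner.

```python
from typing import Callable, List


def digitize(brightness: Callable[[float, float], float],
             width: int, height: int, levels: int = 256) -> List[List[int]]:
    """Sample a continuous brightness function (0.0 to 1.0) on a width x height
    grid and quantize each sample to an integer gray level in [0, levels - 1].
    The 256-level default is an assumption, not the historical scanner's depth."""
    grid = []
    for row in range(height):
        y = (row + 0.5) / height                # sample at the centre of each cell
        line = []
        for col in range(width):
            x = (col + 0.5) / width
            v = min(max(brightness(x, y), 0.0), 1.0)    # clamp to the valid range
            line.append(min(int(v * levels), levels - 1))
        grid.append(line)
    return grid


# A left-to-right gradient, read as a 4 x 2 grid with only four gray levels.
print(digitize(lambda x, y: x, 4, 2, levels=4))
```

Once every point is an integer, the picture is simply a table of numbers, which is what makes the manipulations described next possible.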

Once the computer has "read" the image and converted it to a series of numbers, there is tremendous flexibility in what the computer can then do with this numerical information. The image can be reconstituted with different contrasts, different resolutions, different colors. We can enlarge, stretch, squeeze, twist, rotate it, position it in space in any way. In fact, the only limitations are imposed by the creative talents of the person operating the machine.
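To make the point about flexibility concrete, here is a sketch of a few of the geometric operations mentioned (enlarging, stretching, squeezing, rotating) applied to an image held as rows of numbers. The function names are hypothetical and the nearest-neighbour approach is chosen for simplicity; it is not a description of the programs actually used on the film.

```python
from typing import List

Image = List[List[int]]


def scale(img: Image, fx: int, fy: int) -> Image:
    """Nearest-neighbour scaling by whole-number factors: repeat each value fx
    times across and each row fy times down. Equal factors enlarge the image;
    unequal factors stretch it."""
    return [[v for v in row for _ in range(fx)] for row in img for _ in range(fy)]


def squeeze(img: Image, fx: int) -> Image:
    """Squeeze horizontally by keeping only every fx-th column."""
    return [row[::fx] for row in img]


def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


frame = [[1, 2],
         [3, 4]]
print(scale(frame, 2, 1))   # stretched horizontally
print(rotate_90(frame))     # turned on its side
```

Because each operation only rearranges or repeats numbers, they can be chained in any order before the image is played back onto film.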

That is, if such a machine is available at all. This was my first problem: tracking down scanning and playback hardware. At first it appeared that I would have to do all the scanning on a machine in Houston, and all the playback at the Jet Propulsion Laboratory in Pasadena. That was an inconvenient solution, and also expensive: JPL estimated that machine time for playback would cost $100,000 — for two and a half minutes of motion-picture film!

In any case, that was five times my total budget, so another approach was required. Quite by chance, while doing some other work at Information International, Inc., in Los Angeles, I discovered that they had developed, over the last four years, a prototype image processing system suitable for my needs. Critical was the fact that their hardware could handle sprocketed 35mm film with the necessary registration.
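To put the JPL estimate in perspective, the arithmetic below converts it to a per-frame figure. It assumes the standard sound-film speed of 24 frames per second; the per-frame cost and the implied budget are derived from the figures above, not quoted from any estimate.

$$
2.5\ \text{min} \times 60\ \tfrac{\text{s}}{\text{min}} \times 24\ \tfrac{\text{frames}}{\text{s}} = 3600\ \text{frames},
\qquad
\frac{\$100{,}000}{3600\ \text{frames}} \approx \$27.78\ \text{per frame}
$$

$$
\text{total budget} \approx \frac{\$100{,}000}{5} = \$20{,}000
$$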

That was a lucky break.

After making financial arrangements with the Information International management, I began work on the programs with Russ Ham and Dean Anschultz of that company. To their credit, they rapidly wrote excellent and flexible programs.

These programs instructed the computer to scan production footage frame by frame and convert it to numerical information. The computer then clumped the information to produce a new image composed of an array of squares. (Strictly speaking, the computer constructed an image of rectangles, but because the production footage was shot in anamorphic Panavision, the rectangles would appear as squares once the image was unsqueezed.)
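One plausible way to "clump" the numbers into flat blocks is to average every pixel inside each block and paint the whole block with that average. The sketch below does exactly that; block averaging is an assumption, since the article does not say how the programs combined the points. The helper `anamorphic_cell` reflects the standard 2x horizontal squeeze of Panavision anamorphic lenses: a block that is half as wide as it is tall on the negative becomes a square when unsqueezed in projection. All names are hypothetical.

```python
from typing import List, Tuple

Image = List[List[float]]

ANAMORPHIC_SQUEEZE = 2  # Panavision anamorphic lenses compress the image 2x horizontally


def mosaic(img: Image, cell_w: int, cell_h: int) -> Image:
    """Replace each cell_w x cell_h block with the mean of its values, producing
    an image made of flat rectangles. Blocks at the right and bottom edges may
    be smaller if the image size is not an exact multiple of the cell size."""
    height, width = len(img), len(img[0])
    out = [[0.0] * width for _ in range(height)]
    for top in range(0, height, cell_h):
        for left in range(0, width, cell_w):
            rows = range(top, min(top + cell_h, height))
            cols = range(left, min(left + cell_w, width))
            mean = sum(img[r][c] for r in rows for c in cols) / (len(rows) * len(cols))
            for r in rows:
                for c in cols:
                    out[r][c] = mean
    return out


def anamorphic_cell(cell_height: int) -> Tuple[int, int]:
    """(width, height) of a block on the squeezed frame that will look square
    once the image is unsqueezed: half as wide as it is tall."""
    return cell_height // ANAMORPHIC_SQUEEZE, cell_height


w, h = anamorphic_cell(4)           # (2, 4): a tall rectangle on the negative
frame = [[float(c + r) for c in range(4)] for r in range(4)]
print(mosaic(frame, w, h))
```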

I then entered a crucial phase of testing. Although we knew, in principle, that the system would work, there were major questions about how best to achieve the desired effect. An early consideration was how large to make our rectangles — in other words, the resolution of the image. We wanted coarse resolution, but obviously the perceived resolution in a theater depends on the size of the projected image and the viewer's distance from the screen. I made several projection tests in different-sized theaters, and from this determined the best resolution for our purposes. That turned out to be an image composed of 3600 rectangles.
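The two considerations in this paragraph, how many blocks fill the frame and how big each looks from a seat, can be estimated as follows. This is a hypothetical sketch: the article gives only the total of 3600 rectangles, not the grid layout, so the 2.35:1 projected aspect ratio and the choice of square cells are assumptions, as are any screen sizes and viewing distances fed to the second function.

```python
import math
from typing import Tuple


def grid_for(cell_count: int, aspect: float) -> Tuple[int, int]:
    """Columns and rows of square cells that roughly fill a frame with the
    given aspect ratio (width / height) using about cell_count cells."""
    rows = max(1, round(math.sqrt(cell_count / aspect)))
    cols = round(cell_count / rows)
    return cols, rows


def cell_angle_arcmin(screen_width: float, cols: int, distance: float) -> float:
    """Angle one cell subtends at the viewer's eye, in minutes of arc.
    screen_width and distance must be in the same unit."""
    cell_width = screen_width / cols
    return math.degrees(2 * math.atan(cell_width / (2 * distance))) * 60


cols, rows = grid_for(3600, 2.35)
print(cols, rows)
print(cell_angle_arcmin(12.0, cols, 10.0), cell_angle_arcmin(12.0, cols, 30.0))
```

For a 2.35:1 frame this gives 92 columns by 39 rows (3,588 cells). The second function shows why the tests had to be run in several theaters: the same grid looks coarser from a front seat or on a bigger screen, because each cell subtends a larger angle.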

Our next problem was shooting criteria: we had to decide which qualities of production footage were important for a good computerized image. (This was very important, because all the computer work would be done in postproduction, after the footage had already been shot.) We found that the system worked best for medium and close shots, for lateral action, and for shots with good contrast and color separation.

These considerations led us to make up special costumes for our principals — all-white costumes for dark backgrounds; black costumes for light backgrounds; and red costumes for exterior shots. Production footage intended for the computer would be shot with actors wearing these costumes.

“It was a slow business, requiring about one minute per frame, or about eight hours for a 10-second sequence.”

This testing phase ran for two months, and then the first scenes began to arrive from the production. As they came in, I had the MGM optical department make black-and-white color separations from the camera negative. Each separation was individually scanned, data-processed, and stored on tape. The next stage was playing back the tape on a high-resolution oscilloscope and rephotographing the resulting image. This process was done frame by frame, for each color separation, for each scene.
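The separation workflow has a direct digital analogue: split a color image into red, green, and blue records (each a single gray-scale image, like a black-and-white separation), process each record on its own, and recombine. The sketch below is illustrative only; the historical work used photographic separations and an optical printer, and the function names and 0 to 255 value range are assumptions.

```python
from typing import Callable, List, Tuple

Pixel = Tuple[int, int, int]
ColorImage = List[List[Pixel]]
Record = List[List[int]]


def separate(img: ColorImage) -> Tuple[Record, Record, Record]:
    """Split an RGB image into red, green and blue records, one gray-scale
    image per primary, analogous to black-and-white separation masters."""
    return tuple([[px[i] for px in row] for row in img] for i in range(3))


def recombine(red: Record, green: Record, blue: Record) -> ColorImage:
    """Merge three records back into one RGB image."""
    return [list(zip(r, g, b)) for r, g, b in zip(red, green, blue)]


def process_separately(img: ColorImage, step: Callable[[Record], Record]) -> ColorImage:
    """Run the same processing step on each record independently, then recombine."""
    return recombine(*(step(rec) for rec in separate(img)))


frame = [[(255, 0, 0), (0, 128, 255)]]
negative = process_separately(frame, lambda rec: [[255 - v for v in row] for row in rec])
print(negative)
```

Processing each record independently is what makes the approach flexible, but it also means every record must be handled consistently, since nothing ties the three back together until recombination.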

But it was also exciting to be there, especially during the scanning. You would be sitting in this big room with the lights off and all this equipment around you, and several monitors (graphics display terminals) would all be showing the newly digitized image at the same time, so wherever you looked you would see the image. It was quite amazing, going from the analog world of 35mm to this digital, electronic world.

We had our usual share of foul-ups and mishaps. Contrast control was very delicate because it had two phases: contrast was determined partly in scanning and partly in rephotographing the digitized image. Because we were working with color separations, any shift in contrast altered our final colors. This made it very tricky.
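Why a contrast shift changes the final color can be shown with a single pixel. The sketch applies a simple linear contrast curve around a mid-gray pivot to each record; the curve, the pivot of 128, and the sample values are assumptions chosen for illustration, not measurements from the film. When one record's contrast drifts (say, in scanning or rephotography) while the others do not, a neutral gray picks up a tint.

```python
from typing import Sequence, Tuple

Pixel = Tuple[int, int, int]


def contrast(v: int, gain: float, pivot: int = 128) -> int:
    """Scale a value's distance from a mid-gray pivot and clamp to 0..255."""
    return min(max(round(pivot + gain * (v - pivot)), 0), 255)


def regrade(pixel: Pixel, gains: Sequence[float]) -> Pixel:
    """Apply a separate contrast gain to the red, green and blue records."""
    return tuple(contrast(v, g) for v, g in zip(pixel, gains))


gray = (100, 100, 100)
print(regrade(gray, (1.3, 1.3, 1.3)))   # matched gains: still neutral, just darker
print(regrade(gray, (1.0, 1.3, 1.0)))   # green record drifts: gray turns magenta
```

With matched gains the gray stays neutral; with only the green record's contrast raised, green drops below red and blue and the gray takes on a magenta cast.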

Once I had the re-photographed color separations, I took them to my own optical printer to recombine them. Here I had more testing, and further discussions with the director on how they should be recombined. After a great deal of testing with different filters, and printing the records in different colors, we settled on an approach that would give the most natural colors.

Even so, I was making adjustments in each sequence, right up to the time of negative cutting. There are 14 computer sequences in the final film, and each was really treated individually. On some, contrast had to be changed; on some, we zoomed electronically; on some, we increased resolution; and on four sequences we printed only the red record.

Toward the end, we were operating under terrible time pressure, and from my standpoint some of that is reflected in the color quality of the final prints. Audiences, however, seem to be responding enthusiastically.

As time goes on, and the computer systems which do this work become faster and cheaper and smaller, it should be possible to think of an "electronic optical printer" with broad applications in feature films, commercials — in fact, any visual area. Actually, my work on Westworld suggested many more possibilities than we were able to explore, and there are certainly many others yet to be imagined.
